Plugging in: directly linking the brain to a computer

Brain-computer interfaces (BCIs) link the brain directly to external computers, allowing users to do something just by thinking it. In recent years, BCIs have moved from science fiction to a technology with real potential. At the University of Technology Sydney, Australia, Professor Chin-Teng Lin is at the forefront of developing such systems.

TALK LIKE A … COMPUTER SCIENTIST

Artificial intelligence (AI) — computer systems able to perform tasks normally requiring human intelligence

Augmented reality (AR) — technology that superimposes computer-generated images onto a user’s view of the real world

Brain-computer interface (BCI) — a direct communication pathway between the brain’s activity and an external device

Direct-sense BCI (DS-BCI) — BCI methods that operate directly and seamlessly from human cognition, rather than relying on external stimuli

Electroencephalography (EEG) — recording of brain activity through non-invasive sensors attached to the scalp that pick up the brain’s electrical signals

Machine learning — computer systems able to learn and adapt without following explicit instructions, through recognising and inferring patterns in data

Virtual reality (VR) — computer-generated simulations of environments that users can interact with in a way similar to real life

Brains and computers share many similarities. Both use electrical signals transmitted through complex networks to trigger particular events. The human brain remains far more sophisticated than even the most advanced computer, but the gap is narrowing and, with the help of other innovative technologies, linking brains with computers is coming closer to practical reality. The combination of human and machine intelligence has a staggering array of potential real-world applications, from the detection of cognitive illnesses or emotional states, to precise control of sophisticated machines, to the intuitive navigation of immersive virtual worlds.

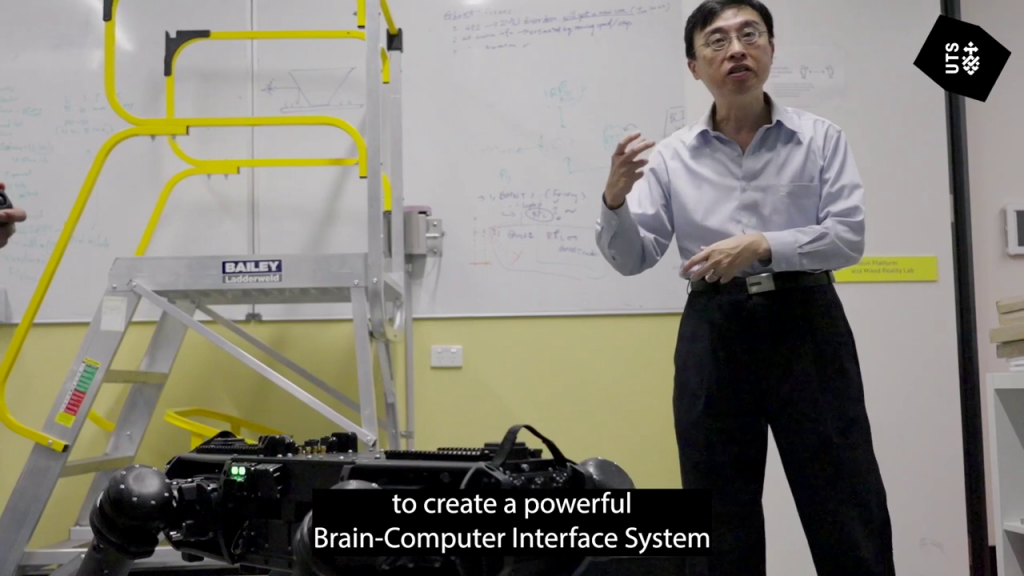

Professor Chin-Teng (CT) Lin works at the University of Technology Sydney. He has several decades of experience at the forefront of human-machine collaboration and sees a bright future for brain-computer interfaces. “Recent advances in AI, material sensor technologies and attention to user-friendliness in computer hardware have spurred BCI research, taking it from pure academia into the world of industry,” he explains.

How do BCIs work?

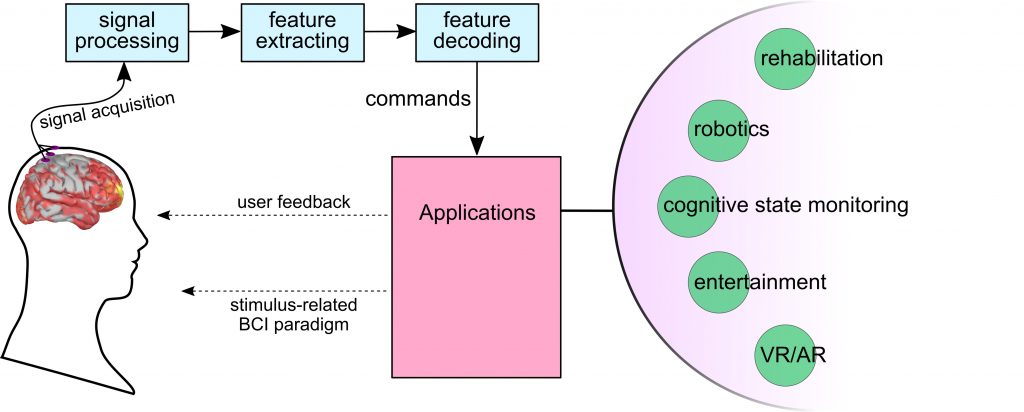

“BCIs provide a channel for humans to interact with external artificial devices through their brain activity,” says CT. “Usually, a machine learning algorithm decodes electrical signals in the brain to work out the user’s intentions and then transmits a ‘mental command’ to the device.” There are a variety of different sensor systems to pick up and interpret brain activity, each with their own pros and cons. Some measure the brain’s electrical signals, some measure its magnetic activity and others measure changes in blood oxygen.
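The decoding step CT describes can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the team's method: the "brain signals" are synthetic noisy sine waves, the sampling rate `FS`, the two frequencies (10 Hz and 20 Hz), the labels and the `COMMANDS` table are all hypothetical, and the machine learning algorithm is a simple nearest-centroid classifier chosen for brevity.

```python
import math
import random

FS = 128  # assumed sampling rate in Hz (hypothetical value)

def synth_epoch(freq_hz, n=FS, noise=0.5, rng=None):
    """One second of a noisy sine wave, standing in for a recorded signal."""
    rng = rng or random.Random()
    return [math.sin(2 * math.pi * freq_hz * t / FS) + rng.gauss(0, noise)
            for t in range(n)]

def power_at(signal, freq_hz):
    """Signal power at a single frequency, computed from one DFT bin."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * t / FS)
             for t, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * t / FS)
             for t, x in enumerate(signal))
    return (re * re + im * im) / (n * n)

def features(signal):
    """Reduce a raw signal to two numbers: power at 10 Hz and at 20 Hz."""
    return (power_at(signal, 10), power_at(signal, 20))

def train_centroids(labelled):
    """Average the feature vectors of each label: [(features, label), ...]."""
    sums, counts = {}, {}
    for feat, label in labelled:
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: tuple(v / counts[lab] for v in acc) for lab, acc in sums.items()}

def decode(signal, centroids):
    """Return the label whose centroid is closest to the signal's features."""
    feat = features(signal)
    return min(centroids, key=lambda lab: math.dist(feat, centroids[lab]))

# Hypothetical mapping from a decoded intention to a 'mental command'.
COMMANDS = {"rest": "STOP", "go": "MOVE_FORWARD"}

if __name__ == "__main__":
    rng = random.Random(0)
    training = ([(features(synth_epoch(10, rng=rng)), "rest") for _ in range(5)]
                + [(features(synth_epoch(20, rng=rng)), "go") for _ in range(5)])
    centroids = train_centroids(training)
    new_signal = synth_epoch(20, rng=rng)
    print(COMMANDS[decode(new_signal, centroids)])
```

The structure mirrors the description above: measure a signal, extract features, let a trained model infer the intention, then send a command to the device. Real systems work with far noisier multi-channel recordings and more capable models.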

“While some measurement techniques are non-invasive, others are invasive, meaning the sensors must be placed under the scalp,” says CT. “While invasive methods usually provide more precise measurements, they also have obvious disadvantages for the user.” Understandably, lots of people do not want electrical devices under their skin, and there are risks involved with prolonged or repeated use, which makes invasive methods unlikely to be widely taken up in society. “Research on invasive methods is mostly limited to animals at the moment and, although the latest findings indicate they might be safe for human use, it’s unlikely that human implants will be approved any time soon,” says CT. “For the foreseeable future, non-invasive sensors are the only practical solution for investigating cognitive processes in the human brain.”

Limitations of BCIs

While BCIs have crossed the threshold from fiction to fact, uptake in the world outside the lab is slow. “Interacting with the real world via a computer is unnatural to humans,” says CT. “Furthermore, the response feedback produced by the computer is far slower than our brains, creating a delay.” The accuracy of machine learning is another limitation, though this is a rapidly advancing field. “Recent progress in deep learning provides substantial potential benefits for neural networks, computer vision and BCI techniques,” says CT.

Reference

https://doi.org/10.33424/FUTURUM327

There are other practical drawbacks too. “The sensors that pick up signals from the brain still need improvement,” explains CT. “Most BCIs only work when the user is stationary, limiting their use in many real-world applications.” Because non-invasive methods, in particular, rely upon detecting quite subtle signals, any interference – such as the noise created by movement or physically interacting with an object – can affect their functioning. Staying motionless while ‘interacting’ with the world is unnatural to us and means such methods can only be used in controlled environments.
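One simple way to see why movement is a problem is to look at how recordings are often screened before decoding. The sketch below discards any stretch of signal (an 'epoch') whose peak-to-peak swing is too large to be plausible brain activity. The function names and the 100-microvolt threshold are illustrative assumptions, not values from CT's work.

```python
def peak_to_peak(epoch):
    """Difference between the largest and smallest sample in an epoch."""
    return max(epoch) - min(epoch)

def reject_artifacts(epochs, threshold_uv=100.0):
    """Keep only epochs whose swing stays within the (assumed) threshold.

    Large swings are treated as interference, for example from movement,
    rather than as brain activity.
    """
    return [epoch for epoch in epochs if peak_to_peak(epoch) <= threshold_uv]

if __name__ == "__main__":
    still = [4.0, -6.0, 9.0, -3.0]         # small fluctuations, in microvolts
    moving = [5.0, 180.0, -140.0, 12.0]    # large swing from a made-up movement
    print(len(reject_artifacts([still, moving])))
```

If the user moves constantly, most epochs are thrown away and little usable signal remains, which is one reason many systems only work when the user stays still.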

Wearable computers

We are already familiar with the concept of wearable computers, through VR headsets, AR glasses and other trendy tech. As well as their entertainment value, wearable computers also have many promising practical applications. “Wearable computers can offer highly immersive experiences for entertainment, health monitoring and research purposes, among many others,” says CT. “Their research applications are most exciting for us at present.”

Wearable computers have revolutionised the practicalities of BCI research. Until recently, BCI research relied upon static and simple stimuli – presenting an object to a subject in a lab environment, for instance – which do not bear much relation to everyday life. “By using wearable computers, researchers can design, simulate and finely control experiments to examine human brain dynamics inside and outside the laboratory,” says CT. VR and AR can now create sophisticated scenarios similar to real life; monitoring a subject’s brain activity as they encounter these scenarios produces results that relate far more meaningfully to the real world.

Brainwave (EEG) dry sensors and headsets for BCI developed by CT Lin and his team

Direct-sense BCIs

Based on such technological advancements and their own innovations, CT and his team are working on a next-generation solution called direct-sense BCI (DS-BCI). “We are developing two systems within DS-BCI, based on speech and vision, to seamlessly decode the brain signals linked to our natural senses without additional stimulus,” he says. Such techniques are far more closely aligned with our experiences of the real world than most BCI research. To make its measurements, the team is principally using non-invasive sensors that monitor electrical signals in the brain, a technique known as electroencephalography (EEG).

Direct-speech BCI aims to translate ‘silent speech’ from neural signals into system commands. “Currently, our EEG sensors can decode the intention conveyed when participants imagine themselves speaking,” explains CT. “This approach provides a novel channel of interaction with BCIs for any user and could be an important assistive tool for people not able to speak naturally.”

Direct-sight BCI detects what object is in a person’s mind based on their EEG signals as they look around an environment. “This is more innovative than current BCI methods, which rely mostly on stimulus onset – in other words, displaying a specific series of objects to the subject – while ours takes a more natural approach to interacting with one’s environment,” says CT. “The ability to actively recognise objects is an essential skill in daily life, which is why this is an active research field.”

The possibilities for the future of BCIs are exciting to CT and his team. “Building a system that translates user intentions into BCI instructions has shifted from a distant goal to a feasible possibility,” says CT. “From our progress so far, we foresee a wearable system that can directly decode a user’s sensory data. The speed and capacity for natural interaction of these BCIs will bring this technology closer to real-life application.”

A typical BCI system configuration (Copyright 2021 IEEE. Reprinted, with permission, from C. T. Lin and T. T. Nguyen Do, “Direct-Sense Brain-Computer Interfaces and Wearable Computers,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, 50th Anniversary Issue, Vol. 51, No. 1, pp. 298-312, January 2021)

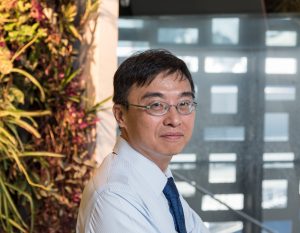

Professor Chin-Teng Lin


Co-Director, Australian AI Institute (AAII), Distinguished Professor, School of Computer Science, University of Technology Sydney (UTS), New South Wales, Australia

Fields of research: Computer Science, Artificial Intelligence (AI), Brain Computer Interfaces (BCIs)

Research project: Developing sophisticated direct-sense BCIs based on natural cognition to progress BCI technology towards real-world applications

Funder: This work was supported in part by the Australian Research Council (ARC) under discovery grants DP210101093 and DP220100803. Research was also sponsored in part by the Australian Defence Innovation Hub (DIH) under Contract No. P18-650825. The contents are solely the responsibility of the authors and do not necessarily represent the official views of ARC and DIH.

ABOUT COMPUTER SCIENCE AND BRAIN-COMPUTER INTERFACES (BCIs)

Distinguished Professor Chin-Teng Lin is a leading researcher in BCIs. He explains more about his research and how it combines understanding of the human brain with the latest technological developments.

“I study the human brain and the physiological changes that take place when cognition and behaviour are happening. In particular, I’m interested in ways to combine this physiological information with AI to develop monitoring and feedback systems.

“I want to improve the flow of information from humans to robots. This will help humans make better decisions and enable them to respond to complex, stressful situations with the assistance of machines. I believe such human-machine cooperation can deliver a common good for humanity.

“In 1992, I invented fuzzy neural networks (FNNs). A ‘fuzzy’ system is something that doesn’t always follow hard rules, such as human cognition. I introduced neural-network learning into these systems, allowing machines to learn and predict the approximate functions of such a system, essentially incorporating human-like reasoning into computers. Since then, I’ve developed a series of FNN models for different learning environments, with applications including cybersecurity and coordinating multiple drones at once.

“I founded the Computational Intelligence and Brain-Computer Interface Lab at UTS. The lab is developing mobile sensing technology to measure brain activity using non-invasive methods, to help assess human cognitive states.

“From 2010-2020, I led a large project with the US Army Research Lab. The project explored how to integrate a BCI when the user is in a moving vehicle or suffering cognitive fatigue, leading to the development of wearable EEG devices. In more recent years, I’ve worked with Australia’s Department of Defence to examine how to use brainwaves to command and control autonomous vehicles.”
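The ‘fuzzy’ idea CT mentions above, a system that does not always follow hard rules, can be illustrated with a small sketch. Instead of declaring a room either warm or not warm, a fuzzy set gives each temperature a degree of membership between 0 and 1. The triangular shape, the category names and the temperature ranges below are illustrative assumptions. This shows only the fuzzy-set ingredient, not CT's FNN models, which add neural-network learning on top.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0 to 1) in a triangular fuzzy set.

    Membership is 0 at or outside left/right and rises linearly to 1 at peak.
    """
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

# Hypothetical fuzzy categories for room temperature in degrees Celsius.
CATEGORIES = {
    "cool": (5, 15, 25),
    "warm": (15, 25, 35),
}

def fuzzify(temperature):
    """Return the membership of a temperature in every category."""
    return {name: triangular(temperature, *shape)
            for name, shape in CATEGORIES.items()}

if __name__ == "__main__":
    print(fuzzify(22))
```

A temperature of 22 degrees belongs partly to ‘cool’ and mostly to ‘warm’ at the same time, which is closer to how people reason than a single hard cut-off.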

Pathway from school to computer science

• For a career in computer science, commonly desirable subjects to study at school and post-16 include mathematics, computer science, statistics and physics. If you are interested in BCIs and similar technologies, biology or psychology may also prove useful.

• At university, CT suggests seeking courses or modules in areas such as linear algebra, algorithms and data science, signal processing, optimisation, neural networks, fuzzy logic and AI.

Explore careers in computer science

• The IEEE Computational Intelligence Society runs educational outreach programmes for high schools, competitions and webinars, and has a repository for educational materials.

• The UTS Faculty of Engineering and Information Technology runs innovative outreach programmes for schools.

• CT says the UTS Australian AI Institute and the Computational Intelligence and Brain-Computer Interface Lab both offer opportunities for students to get involved practically.

• Computer scientists are in high demand, and there are many opportunities available in academia, industry and the public sector. According to www.au.talent.com/salary, the average annual salary for a computer scientist in Australia is AUS$103,000.

Meet CT

“As a child, I was fascinated by movies and toys featuring robots. This sowed the seeds for pursuing a career in finding ways for humans and machines to team up and work together to solve real-world issues.

“After university, I became specifically interested in ‘augmented human intelligence’. This led to me inventing the fuzzy neural network (FNN) models, which integrate high-level human-like thinking with low-level machine learning. This has since led to hundreds of thousands of scientific publications on FNNs from researchers around the world.

“Later on in my career, I realised that BCI advancement would rely on a deeper understanding of the human brain. I began researching brain science about 20 years ago, focusing on ‘natural’ cognition — how brains behave when people are performing ordinary tasks in real-life situations. This included developing non-invasive BCI headsets that subjects can wear.

“Now, I think it’s the right time to pursue my ultimate career goal: to augment human intelligence through BCI and AI. My dream is to develop the next-generation human-machine interface, allowing human brains and computers to truly work together and enhance one another in decision-making.”

CT’s top tips

1. When you’re working on ways to integrate computer science into society, interdisciplinary knowledge – such as having an understanding of life sciences and biomedical engineering – is useful.

2. Look into new research and the latest industry developments to keep abreast of progress and stay excited about the field. Think especially about real-life applications of such technologies.
