Using AI and computer science as a force for social good

Professor Zhigang Zhu is a computer scientist based at The City College of New York in the US. He and his collaborators have established the SAT-Hub project, which aims to provide better location-aware services to underserved populations with minimal infrastructure changes.

TALK LIKE A COMPUTER SCIENTIST

ASSISTIVE TECHNOLOGY – assistive, adaptive, and rehabilitative devices for people with a disability or the elderly population

ARTIFICIAL INTELLIGENCE – intelligence demonstrated by machines

ETHICAL – relating to whether something is morally acceptable

LIDAR – a method for determining ranges by targeting an object with a laser and measuring the time for the reflected light to return to the receiver

GPS (GLOBAL POSITIONING SYSTEM) – a global navigation satellite system that provides location, velocity and time synchronisation

AUGMENTED REALITY – an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information
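The LIDAR definition above rests on a simple time-of-flight relation. The laser pulse travels to the object and back, so the range d is half the distance light covers (at speed c) during the measured round-trip time Δt. The numbers below are an illustrative worked example only, not measurements from the project.

```latex
d = \frac{c\,\Delta t}{2}, \qquad
\Delta t = 20\ \text{ns},\; c \approx 3.0\times10^{8}\ \text{m/s}
\;\Rightarrow\;
d \approx \frac{(3.0\times10^{8})(20\times10^{-9})}{2}\ \text{m} = 3\ \text{m}
```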

For those of us without visual impairment, it is easy to take everyday tasks for granted. When we walk around our local town or village, moving from A to B is straightforward; equally, when we navigate our homes, walking between rooms or floors is a task that we perform almost without thought. However, for blind or visually impaired (BVI) people, moving through indoor environments is not as simple or straightforward.

The World Health Organisation estimates that at least 2.2 billion people have a vision impairment. Of these, more than 285 million people have low vision and over 39 million people are blind. Indoor navigation technologies do exist, but they have typically been designed for autonomous robots. When robots move around inside a space, they use sensors and other technologies to detect walls, barriers and steps, and they use this information to change course or position.

However, researchers have recently begun to look at how these solutions might be used or adapted to assist BVI people, much as GPS supports navigation outdoors. The hope is that BVI people will be better able to navigate indoors and overcome some of the challenges associated with visual impairment. Professor Zhigang Zhu is a computer scientist based at the City College of New York. His work focuses on the development of a Smart and Accessible Transportation Hub (SAT-Hub) for Assistive Navigation and Facility Management. One area of focus is helping BVI people navigate an environment with just a smartphone, without the need for additional hardware devices.

THE APP

Chief among the innovations that Zhigang has worked on is ASSIST (Assistive Sensor Solutions for Independent and Safe Travel of Blind and Visually Impaired People). The team has developed two ASSIST app prototypes. “The first app is based on a 3D sensor called Tango on an Android phone, while the second app is simply based on the onboard camera on an iPhone,” explains Zhigang. “We used the Augmented Reality (AR) features of both types of phones for 3D modelling, but the key features of our apps are the fast modelling of a large-scale indoor environment without much special training for a modeller, a client-server architecture that allows the modelling to scale up, a hybrid sensor solution for accurate real-time indoor positioning, and, finally (and probably most importantly), a personalised route-planning method and customised user interfaces tailored to the needs of various users with travelling challenges.”
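The hybrid positioning idea combines BLE beacons, which tell the app which region a user is in, with camera-based tracking for a fine, real-time position. The details of the ASSIST method are not given here, so the sketch below is only one common coarse-to-fine pattern, written under our own assumptions. The strongest average beacon signal picks the region, and a camera estimate is kept only if it falls inside that region. The beacon IDs, region names, bounds and averaging rule are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

@dataclass
class BeaconReading:
    beacon_id: str
    rssi_dbm: float  # received signal strength; values closer to 0 are stronger

# Hypothetical beacon-to-region map for one building.
BEACON_REGIONS: Dict[str, str] = {
    "b1": "lobby",
    "b2": "lobby",
    "b3": "platform_a",
    "b4": "platform_b",
}

Bounds = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in metres

def estimate_region(readings: Iterable[BeaconReading],
                    beacon_regions: Dict[str, str] = BEACON_REGIONS) -> Optional[str]:
    """Coarse step: pick the region whose beacons have the strongest mean RSSI."""
    by_region = defaultdict(list)
    for r in readings:
        region = beacon_regions.get(r.beacon_id)
        if region is not None:          # ignore beacons with no map entry
            by_region[region].append(r.rssi_dbm)
    if not by_region:
        return None
    return max(by_region, key=lambda reg: sum(by_region[reg]) / len(by_region[reg]))

def fuse_position(region: Optional[str],
                  camera_xy: Optional[Tuple[float, float]],
                  region_bounds: Dict[str, Bounds]):
    """Fine step: keep the camera estimate only if it lies inside the BLE region."""
    if region is None:
        return None
    if camera_xy is not None and region in region_bounds:
        xmin, ymin, xmax, ymax = region_bounds[region]
        x, y = camera_xy
        if xmin <= x <= xmax and ymin <= y <= ymax:
            return region, camera_xy    # camera track agrees with the BLE region
    return region, None                 # fall back to the coarse region only
```

Averaging signal strength per region is a deliberately simple choice. BLE signal strength fluctuates, so a real system would likely smooth readings over time before deciding.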

ROUTE PLANNING

The ASSIST app uses Bluetooth Low Energy (BLE) beacons to tell users which region they are in, and the onboard camera to track their location accurately and in real time. The route planner's algorithms can draw on information from 3D models of an environment, including landmarks, whether there is data connectivity in a location, the crowd density of a particular area and whether any building or construction work is taking place. They can also take personal preferences into account, such as a preference for stairs or elevators. The app pools all of this information and weighs it to provide the best route for each specific user.
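Personalised route planning of this kind can be framed as a shortest-path search in which each corridor, staircase or elevator carries a cost that depends on the individual user. The sketch below uses Dijkstra's algorithm. That is a standard choice we have assumed, not one the source confirms for ASSIST. The edge attributes, the crowd-penalty formula, the connectivity penalty and the profile fields are all hypothetical illustrations of the factors listed above.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Edge:
    to: str
    length_m: float
    crowd: float = 0.0           # hypothetical scale: 0 = empty, 1 = packed
    construction: bool = False   # True if works currently block this segment
    has_connectivity: bool = True
    kind: str = "corridor"       # "corridor", "stairs" or "elevator"

@dataclass
class UserProfile:
    crowd_weight: float = 0.0    # extra cost multiplier at full crowd density
    avoid_stairs: bool = False
    avoid_elevators: bool = False
    needs_connectivity: bool = False

Graph = Dict[str, List[Edge]]    # directed adjacency list

def edge_cost(e: Edge, p: UserProfile) -> Optional[float]:
    """Personalised cost of one segment, or None if this user cannot use it."""
    if e.construction:
        return None
    if (e.kind == "stairs" and p.avoid_stairs) or (e.kind == "elevator" and p.avoid_elevators):
        return None
    cost = e.length_m * (1.0 + p.crowd_weight * e.crowd)
    if p.needs_connectivity and not e.has_connectivity:
        cost *= 2.0              # hypothetical penalty for data dead zones
    return cost

def plan_route(graph: Graph, start: str, goal: str, p: UserProfile) -> Optional[List[str]]:
    """Dijkstra's algorithm over the personalised, non-negative edge costs."""
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue             # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for e in graph.get(node, []):
            c = edge_cost(e, p)
            if c is None:
                continue
            nd = d + c
            if nd < dist.get(e.to, float("inf")):
                dist[e.to] = nd
                prev[e.to] = node
                heapq.heappush(heap, (nd, e.to))
    if goal not in visited:
        return None              # unreachable for this profile
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]

if __name__ == "__main__":
    graph: Graph = {
        "entrance": [Edge("hall", 20, crowd=0.9), Edge("side", 30, crowd=0.1)],
        "hall": [Edge("platform", 10, crowd=0.9)],
        "side": [Edge("platform", 15, crowd=0.1)],
    }
    print(plan_route(graph, "entrance", "platform", UserProfile()))
    # ['entrance', 'hall', 'platform']
    print(plan_route(graph, "entrance", "platform", UserProfile(crowd_weight=2.0)))
    # ['entrance', 'side', 'platform']
```

With a crowd weight of 0, the shorter but busier route through the hall wins. Raising the weight, as a profile for someone who finds crowds difficult might, makes the planner prefer the quieter side corridor even though it is longer.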

OTHER USERS

The team has also considered people with Autism Spectrum Disorder (ASD), for whom the crowd density feature is particularly useful. Knowing how busy an area is can help anyone using the technology the team has developed, but people with ASD often struggle in large crowds, so this feature is especially important for them.

TESTING

The team has tested the SAT-Hub solutions with both BVI and ASD users. “The first step was to hold focus group studies with users. Fortunately, we have user study experts on our team (Cecilia Feeley at Rutgers for Autism Transportation, Celina M. Cavalluzzi at Goodwill NY/NJ for ASD training, and Bill Seiple at Lighthouse Guild for BVI services),” says Zhigang. “Secondly, we modelled the headquarters of Lighthouse Guild and ran test routes with our users. Eventually, our goal is to install the system in a transportation centre for a larger-scale test.”

CHALLENGES

There are ethical issues and privacy concerns relating to the potentially intrusive nature of the sensors and cameras used to help users. The team is mindful of this and aims to provide users with unobtrusive and inclusive interfaces based on universal design principles. “Since onboard sensors and cameras are used as users’ ‘eyes’ and surveillance cameras are used for crowd analysis, ethical and privacy concerns need to be addressed,” explains Zhigang. “Our human subject studies are approved by the Institutional Review Board (IRB) of the City College of New York.”

There are technical challenges, too, in turning the ASSIST apps from augmented reality (AR) tools that work in a small area into robust, real-time applications that can be scaled up to much larger areas, such as a campus or even an entire city. Another challenge is personalising user interfaces for different groups. To overcome these problems, the team is pursuing cross-disciplinary collaborations across industry, academia and government.

A TEAM EFFORT

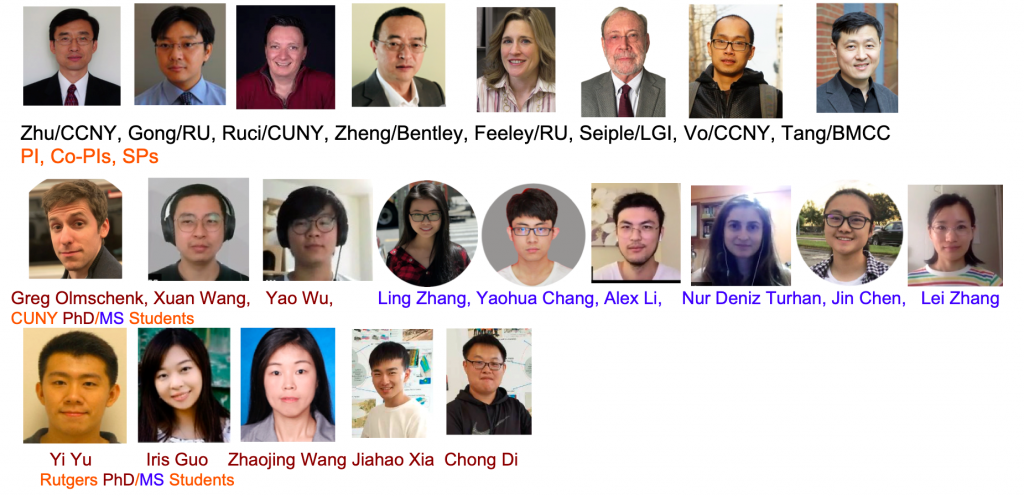

The team consists of experts in AI/machine learning (Zhigang Zhu at City College of New York, Hao Tang at Borough of Manhattan Community College), facility modelling and visualisation (Jie Gong at Rutgers – Co-Lead of the SAT-Hub Project, Huy Vo at City College of New York), urban transportation management and user behaviours (Cecilia Feeley at Rutgers, Bill Seiple at Lighthouse Guild), customer discovery (Arber Ruci at the City University of New York), and industrial partnerships (Zheng Yi Wu at Bentley Systems, Inc.). More importantly, many talented students at various stages of their studies (from high school through undergraduate, master's and PhD level) are contributing to the project and turning ideas into reality.

THE NEXT STEPS

The Co-Principal Investigator, Arber Ruci, is leading a team on an NSF I-Corps training course to bring the ASSIST technology to market. Jin Chen began her research in Zhigang’s lab during her sophomore year and is the entrepreneurial lead of the I-Corps team. The project integrates security, transportation and assistance, and its goal is to develop a solution that provides a safe, efficient and enjoyable travelling experience for all. The newly developed navigation app, iASSIST, uses the regular onboard camera of an iPhone to perform functions similar to those of the 3D sensor in the original ASSIST app.

Reference

https://doi.org/10.33424/FUTURUM146

PROFESSOR ZHIGANG ZHU

Herbert G. Kayser Professor of Computer Science, The Grove School of Engineering, The City College of New York and The CUNY Graduate Center, USA

FIELD OF RESEARCH: Computer Science

RESEARCH: Using AI for social good – transforming a large transportation hub into a smart and accessible hub to serve people with transportation challenges, such as passengers with visual or mobility impairment.

FUNDERS: US National Science Foundation, US Office of the Director of National Intelligence (ODNI) via Intelligence Community Center for Academic Excellence (IC CAE) at Rutgers, US Department of Homeland Security (DHS), Bentley Systems, Inc.

ABOUT COMPUTER SCIENCE

Computer science pervades almost every area of our lives in some way. Ever since Charles Babbage first described what he called his ‘Analytical Engine’ in 1837, the science of computing has been developing and shaping our lives.

As with anything that leads humanity and society into uncharted territory, there are ethical concerns about what developments in the field of computer science and AI could lead to. However, Zhigang and his team’s work shows that computer science and AI can be used as a force for good.

Zhigang chose computer science as his major in college after his high school teacher told him, in the 1980s, that computing would be at the frontier of the 20th and 21st centuries. “In the early stage of my career, I was mostly motivated to pursue innovative and cutting-edge research, so I entered the field of AI and computer vision,” says Zhigang. “More and more, I felt a distinctive calling to use AI and assistive technologies to improve the quality of life for people, especially those who are mentally or physically challenged.”

Zhigang believes that, managed correctly, AI can be used for social good. “I am working on assistive technologies to help people who are blind or visually impaired, or with ASD, among other conditions,” explains Zhigang. “However, AI for social good can be used in many more applications, solving health, humanitarian and environmental challenges.”

As Zhigang’s research shows, AI and computer science can lead to significant improvements in the lives of visually impaired people, as well as ASD users. If you want to make a real positive difference to people’s lives, computer science could be the path for you, especially as technology and its potential applications continue to grow.

EXPLORE A CAREER IN COMPUTER SCIENCE

• Zhigang recommends the Association for Computing Machinery’s website as a major source of important information for those interested in research and education within the field: https://www.acm.org/

• The IEEE Computer Society website contains a wealth of information: https://www.computer.org/

• The average salary for a computer scientist in the United States is $106,000, depending on the level of experience: https://www.indeed.com/career/computerscientist/salaries

PATHWAY FROM SCHOOL TO COMPUTER SCIENCE

Zhigang is a firm believer in studying many different branches of mathematics throughout your schooling and college to give you the breadth of understanding necessary for a career in computer science and data science. He also advocates achieving a solid background in critical thinking to ensure you can keep up with the pace of change that is a hallmark of the field.

https://www.prospects.ac.uk/careers-advice/what-can-i-do-with-my-degree/computer-science

HOW DID ZHIGANG BECOME A COMPUTER SCIENTIST?

I was interested in reading as a child. The materials were scarce in the rural area where I grew up, but I read whatever I could find and tried to connect the dots.

Becoming a scientist was a call to service. When I was young, we were behind in science and technology in the country I grew up in, so I thought doing science and technology, especially computer science, was something I should dive into to make a contribution.

Purpose has made me successful. It doesn’t necessarily have to result in a career or fame, but having a sense of doing something good, in whatever capacity I have, motivates me. I have also maintained a certain level of curiosity in discovery and do not give up easily.

If I fail in one thing, I might pick two more interesting things to pursue – it is important to be doing something meaningful and enjoyable instead of regretting what I failed at. My personal faith also helps me to overcome obstacles by tuning myself to higher callings.

I will never regret switching from autonomous robotics to assistive technology in order to help people in need, even though I have had to learn a lot of new things, collaborate with people from different fields and, in some sense, be willing to serve others. I have made sincere friends, both collaborators and users, and we truly appreciate and understand each other.

MEET JIN CHEN

Jin Chen is a Graduate Research Assistant who worked on the SAT-Hub project as a student and is now leading a project to bring SAT-Hub to the market.

I am the lead developer of the iOS version of the ASSIST app. I implemented the app using the techniques we developed in our research, including hybrid modelling and a transition method for real-time indoor positioning without delays. I developed and tested the landmark extraction method and personalised route planning method, and designed the customised user interfaces with my colleague, Lei Zhang. I am the Entrepreneurial Lead of the NSF Innovation Corps (I-Corps) team, where I focus on investigating the commercial path for our innovation. This includes understanding customer segments and marketing opportunities for our technology through discussion with people from various fields.

Working on this project as a student improved my communication skills and taught me how to talk to people from different backgrounds. I had the opportunity to work with experts from different fields and understand their perspectives – from both business and technical points of view. I also expanded my technical knowledge in computer vision and software development.

When leading a project, the most important things are to have a business mindset and to maintain good communication with the team. Our team is great at keeping everyone updated, with flexible weekly meeting times and organised task tracking. I have learned to adapt to rapid changes and adjust our tasks based on new requests. I have also learned new business language and how best to develop our technology from a business perspective.

Our goal is to help people travel safely and independently in an unfamiliar environment, especially people with disabilities. In order to bring this technology to market, we also need to consider what benefits we can bring to business, so we can attract investment in our technology. The major challenge is to determine the relationship between ourselves and investors and how to make it a profitable venture.

The technology has proven successful at accurate, real-time user localisation and at guiding users to their destinations based on their needs. Now, we are exploring the connection between our technology and the market. Once we discover the use case for our application, we will perform a series of tests and find partners for our business. This customer discovery journey should take several months. If everything goes according to plan, our application will be on the market within the next year or two.

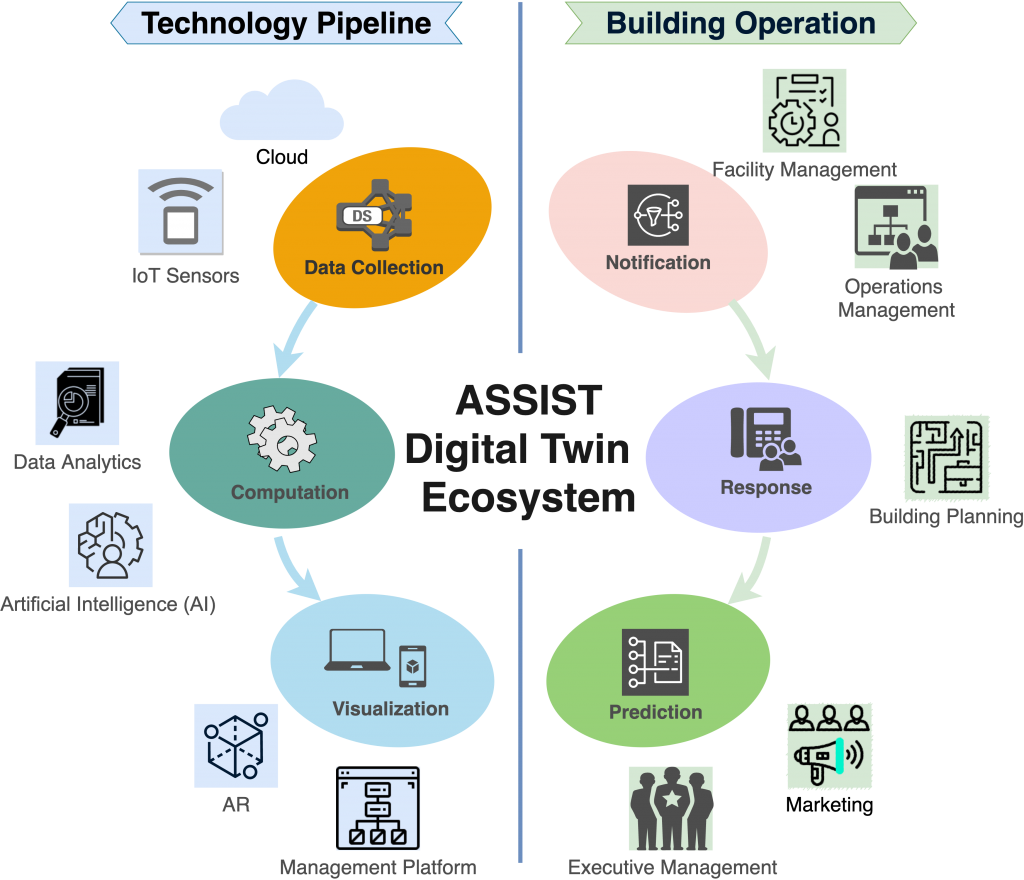

Our I-Corps team wants to expand the ASSIST app into a digital twin application that integrates IoT sensors, cloud computing and analytic models to collect and analyse real-time data, such as visitors’ locations and traffic information. These data can help provide a better navigation experience for visitors and help businesses manage and operate their facilities. We aim to explore our digital market entry point during the I-Corps training and find the best use case for our application and our business model.
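One plausible piece of such a digital twin is turning raw visitor location reports into the crowd-density signal that the route planner already uses. The sketch below is not the team's design. It assumes a hypothetical report format of (timestamp in seconds, visitor ID, zone name), a fixed time window and known zone areas. It counts each visitor only once, in the zone of their latest report within the window, and keeps only per-zone totals, which also limits how much individual movement data is retained.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Report = Tuple[float, str, str]  # hypothetical: (timestamp_s, visitor_id, zone)

def zone_counts(reports: Iterable[Report], now_s: float,
                window_s: float = 60.0) -> Dict[str, int]:
    """Count distinct visitors per zone, using each visitor's latest report in the window."""
    latest: Dict[str, Tuple[float, str]] = {}
    for ts, visitor, zone in reports:
        if now_s - window_s <= ts <= now_s:          # ignore stale or future reports
            if visitor not in latest or ts > latest[visitor][0]:
                latest[visitor] = (ts, zone)
    counts: Dict[str, int] = defaultdict(int)
    for _, zone in latest.values():
        counts[zone] += 1
    return dict(counts)

def crowd_density(counts: Dict[str, int],
                  zone_area_m2: Dict[str, float]) -> Dict[str, float]:
    """Visitors per square metre for every zone with a known, positive area."""
    return {z: counts.get(z, 0) / a for z, a in zone_area_m2.items() if a > 0}
```

A density like this could then be scaled into the 0-to-1 crowd value used on each route segment; how that scaling is chosen is left open here.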

ZHIGANG’S TOP TIPS

01 For computer science and data science, you will need to have a solid foundation in maths, from theory through to applications, so keep this in mind throughout your studies and choose your modules wisely!

02 If you do something you love, motivation comes far more naturally – and motivation is essential for conducting research across your career!

03 Computer science programmes have specific course requirements, but there are subjects I consider fundamental: calculus, linear algebra, data structures and algorithms.
